Aug. 4, 2023, 8:44 a.m.

Browsertech Digest: How Modyfi is building with Wasm and WebGPU

Browsertech Digest

Hey folks, welcome to the digest.

Last issue, I wrote about applications that treat documents like files, not rows in a database using Figma’s architecture as an example.

Yesterday, we open-sourced y-sweet, a Rust Yjs server with S3 persistence, which implements the architecture laid out in that post. Try it out!

My conversation with Modyfi

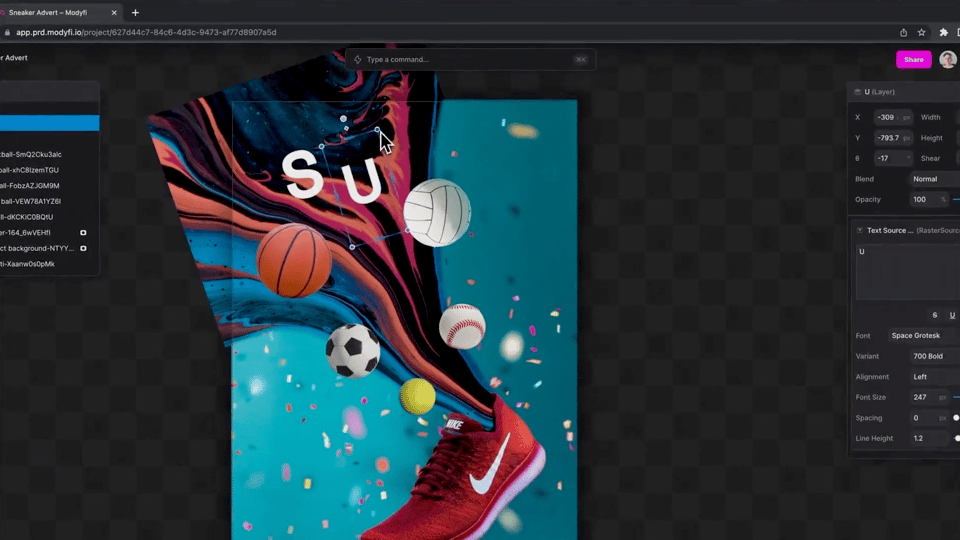

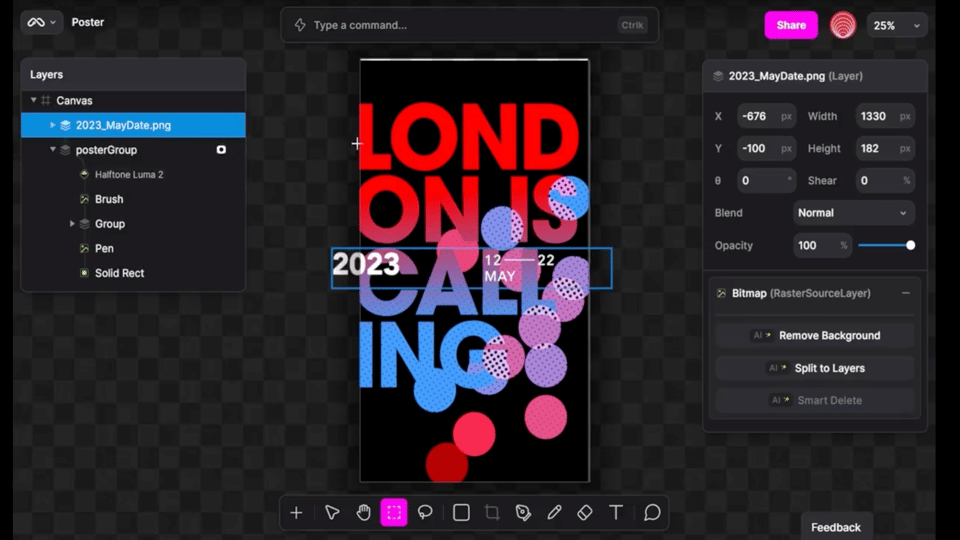

I’ve been keenly following the browser-based image editor Modyfi, which launched officially in June. I had the pleasure of talking with Joe (CEO) and Felix (Engineer) for the digest.

This is the first time I’m trying an interview format for the digest. If you like it, let me know who else you’d like to hear from by replying to this email.

My tl;dr

- Supporting collaboration means no “destructive editing” - instead of modifying pixel values directly, each brush stroke is stored as a mutation that is replayed on each client to render the image.

- The app is split into a Rust+Wasm graphics engine and a React+TypeScript app. The engine is essentially a view on the source-of-truth data, which is a Yjs document on the TypeScript side.

- The engine uses wgpu, cross-compiling to WebGL 2. Soon, it will automatically select between WebGL 2 and WebGPU at runtime.

What Modyfi does

Paul: What does Modyfi do? What’s the origin story?

Joe: We've been using image editing tools for decades now, and they haven't evolved from where they were, really, from that period in time.

Piers [CTO] was on a sabbatical, I was taking a bit of a break, and we got together to go “What if we just rebuilt all of this from the ground up? How would it work?”

So Modyfi is doing for graphic designers what Figma did for product designers. Real time image editing, directly in the browser.

We've got AI capabilities in there. Image generation, but also from what we call image-guided generation. You can put an image in, then it references that and does more fine grained control with the user.

What we're really trying to solve is the process. The process of collaborating is broken from an image editing perspective. And what Google Docs did to Word, or what Figma did to Sketch, Modyfi will do to Photoshop/Illustrator.

Paul: I’d love to talk about the non-destructive editing concept. How did that come about?

Felix: That’s probably one of the technical solutions that we’re most proud of: identifying a way to make collaborative, rich image editing feasible in the first place.

When Joe came to us originally, and was like “why is there no collaborative image editor?”, as graphics programmers we immediately thought: well, there’s about 2,000 reasons why there’s no collaborative graphics editor.

It’s because the bandwidth of pixel diffing. If you approach it from a naive perspective, you think: okay, we want Photoshop to be collaborative. Then you swiftly run into an immense amount of friction in feasibility.

And so we decided to rethink: what does it mean to make images together with other people online, without sending gigabytes of data between potentially hundreds of users at the same time.

My background is originally in architecture, and I was really interested in parametric design tools. There’s a fantastic tool called Grasshopper, which is part of this 3D modeling tool called Rhino. That was kind of my intro to programming in the first place.

And Piers is also a fantastic CG artist and has done some amazing things with a tool called Houdini, which is an industry-standard VFX tool, and again it uses a lot of non-destructive procedural idioms to shape and flow massive datasets using small but sophisticated functions and operations.

So we were both inspired by this and thought, instead of sharing loads of pixel data, what if we just shared these image operations instead? And found some way of representing a document that is very friendly if you’re coming from Procreate or Photoshop?

That’s managed to effectively solve the problem of collaboration, by fully committing to this non-destructive procedural approach, and dressing it up with what looks like simple layers.

The Tech Stack

Paul: What’s the technical stack? How is it organized?

Felix: We’re quite good at compartmentalizing our work. We have an engine team, and we all write Rust and wgpu. And then that interfaces with the TypeScript app.

At the moment our graphics engine runs entirely on the client, but we have made sure to allow it to run natively, which is a huge benefit of using wgpu. We have a whole native test suite which can test all of our image processing things, and it doesn’t need to run in a browser at all. It just runs ultra fast on Metal or DirectX or wherever you want it to.

Note from Paul: wgpu is a cross-platform Rust implementation of the WebGPU API. It has a translation layer to support WebGL 2, which is what Modyfi currently uses.

Paul: How does the engine itself interface with the TypeScript app over the Wasm boundary?

Felix: Rust tooling has some really good libraries for this. We’ve leaned heavily on wasm-bindgen, for example, to share certain types across that boundary.

To keep things as fluid and performant as possible, being aware of how much data is going across that boundary and whether copies are involved is a priority.

We don’t have to share loads of image data across the boundary. If you bring in a new image asset, the engine needs to know about that, so we pass it over [to the engine] once. But then once it’s in, the engine has complete control over your window and canvas. We can process it straight to the screen.

We’re also exploring something called tsify, which is a way to generate TypeScript from Rust types in a slightly different way to the way wasm-bindgen does.

Paul: What’s the breakdown between the engine side and the TypeScript side when it comes to ownership of the screen and the document?

Felix: The TypeScript side basically owns all the document management. We use Yjs. It owns the source of truth for the user’s document.

We use React to render all of our UI, all of the “web elements”. The actual Modyfi canvas, everything in the actual world is rendered directly within wgpu [by the engine].

The engine is told, “ok, this is the document, go and do all the operations needed to give us an output”, but TypeScript doesn’t know at all how to process images. We’ve designed a document structure that lays out a nested tree of layers and operations and modifiers.

The engine also has more responsibilities in terms of interacting with that world. You’re panning and zooming around, and maybe drawing with the Bezier pen tool, clicking and dragging points. All of this is written in Rust.

We found it super useful to basically give the engine more control, and see it as more of a native application that receives pointer events.

Embracing WebGPU

Paul: You’re using WebGL 2 as the backend to wgpu. Are there limitations to that approach? Things you’re excited to switch to WebGPU for?

Felix: To an extent, yes, we are definitely excited to switch over. Compute shaders are the main answer to this. That will afford us new abilities that we don’t currently have with fragment shaders.

But also, there’s so many amazing abilities that we can give users just using fragment shaders and traditional GPU effects.

Joe: Also, running models on the client side. We would have to have that be WebGPU rather than WebGL 2. And performance, we’ll take some more performance.

Paul: Do you have a staging version that is already using the WebGPU backend, or is it more complicated than just swapping them out?

Felix: We’ve definitely built a version of the app for WebGPU. We’ve been doing sporadic testing to make sure we’re moving in the right direction, and I don’t remember it being complicated at all. I think it was literally changing a cargo configuration, maybe one or two things about how we initialize the canvas. I don’t remember it being a huge pain.

We feel confident that we could switch with minimal pain as soon as we’re ready.

Paul: Do you expect to get performance gains for free at that point? Or do all the performance gains come from being able to use additional functionality that you can’t currently, like compute shaders?

Felix: I would expect certain performance gains. There are various workarounds which wgpu does quite elegantly in terms of managing the swap chain. And there’s additional passes required for the WebGL 2 backend, which will go away.

We will see efficiencies in the number of draw calls required. Everything we’ve written has been in terms of command buffers, render bundles… WebGPU idioms. There’s no real benefit to writing code like that for WebGL 2.

We’ll probably see a non-negligible speed-up, but I couldn’t say how much at this point, particularly when we have some pretty intense image effects like refractions and blurs. At that point you’re limited to the raw flops of your GPU.

Building towards interactive AI

Paul: What are you looking ahead to?

Felix: The thing I’m personally most excited about is for the efficiency of the AI models to speed up by an order of magnitude. The abilities and creative potential that we’ll be able to give people if they don’t have to wait 10 or 20 seconds for an image. I think things will really start opening up when you can start manipulating models as they’re running.

Paul: What’s blocking that now? Is it a hardware problem or do the models themselves need to improve?

Felix: Well, I think the model architecture is the thing which is constantly providing the most step changes. There was a point where everyone lost interest in GANs and suddenly everything was about transformers. And diffusion was suddenly producing amazing results.

Now there seems to be a swing back to GANs, because people have found that they just re-architect them a bit, suddenly all these steps required for the de-noising of diffusion just go out the window and you can do it in one [pass].

Final Thought

Paul: Is there anything else you wanted to get out there?

Joe: If you want early access, visit Modyfi.com.

Until next time,

-- Paul