Welcome to the digest.

While a lot of the attention in AI lately has been hosted APIs, people have also been excited about getting smaller versions of these models to run in the browser.

This is appealing for a few reasons. The big one is that AI inference is still expensive to run. Prices are coming down, but the cutting-edge stuff is still prohibitively expensive to put in a freemium product -- if you run it on the server. If you run it on the user's device, you only have to pay for hosting the model weights.

The other major advantage is privacy (data never leaves your machine). Sometimes people also mention latency, but it's a bit overrated -- inference latency will usually dominate network latency anyway.

Model distillation

It will probably never be practical to run the really large-scale general purpose models (GPT, etc.) in the browser, because by the time end-user hardware catches up, the definition of what “large-scale” means will be adjusted proportionately.

But this might not matter much for application-specific use. An application that uses a language model to generate JavaScript code does not derive any benefit from the model weights that encode the atomic number of mercury.

While it's not trivial to just remove the irrelevant weights, it is possible to distill large models into smaller ones. Here's a good blog post that breaks down a paper about distilling language models.

I suspect that we'll look back on this period as the “free meal kits and Uber rides” land-grab era of AI, where VC money pouring in has obfuscated the unit economics of running some of these models by way of generous free token limits and readily available capital.

As the industry matures and the unit economics start to matter, model distillation will become an interesting area to watch.

Inference in the browser

I've previously talked about in-browser inference with TensorFlow.js as well as transformers.js.

A few more approaches have come on my radar recently:

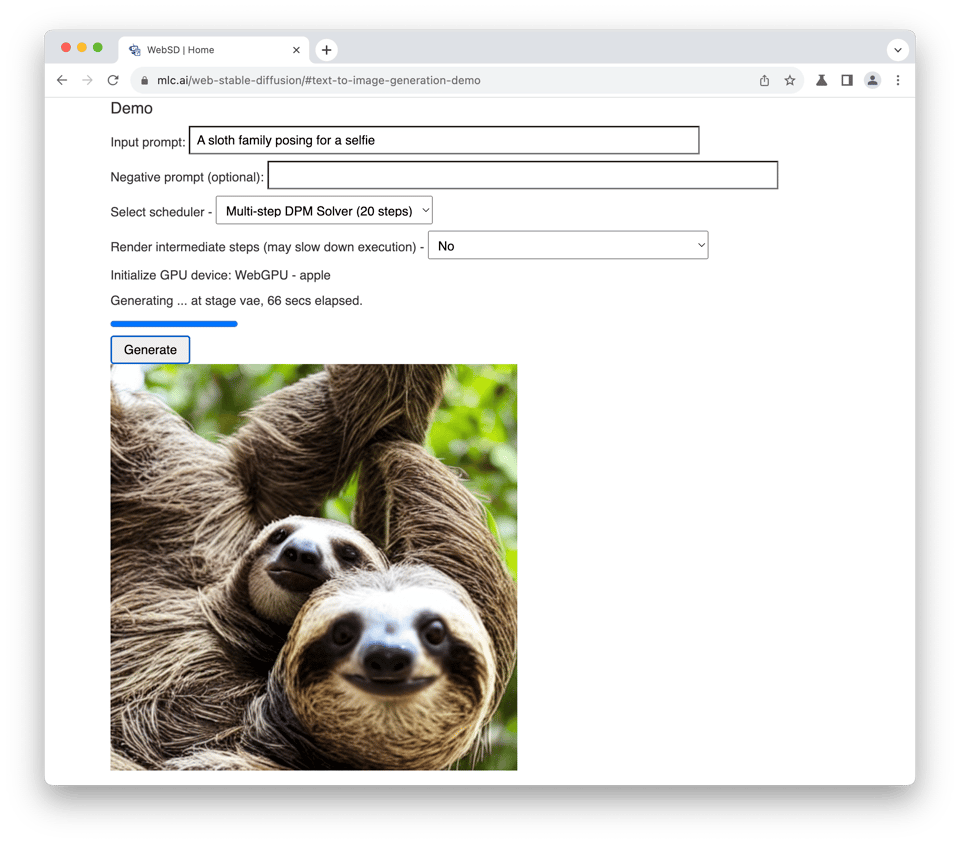

Apache TVM is a project that can compile models from various frameworks (including PyTorch and TensorFlow) into various backends, sort of akin to LLVM but for GPU code. Two recent demos use TVM to implement in-browser Stable Diffusion and an LLM demo based on LLaMA fine-tuned on chats. TVM is used to compile the GPU-side code to WebGPU, and Emscripten is used to compile the CPU-side code to WebAssembly.

WebGPT takes a wonderfully minimalist approach to a similar end. Rather than cross-compiling the models, it just directly implements a bunch of WebGPU kernels in plain JavaScript and WGSL. It doesn't even use a JS bundler! I'm a big fan of how this strips out a bunch of complexity. The repo includes a number of models, including GPT2. There is a web demo that currently requires Chrome Canary or Beta.

WasmGPT throws away the GPU and ports the inference directly to WebAssembly to run on-CPU. It uses Emscripten to port the famous llama.cpp and whisper.cpp by the same author.

CozoDB is a vector database written in Rust that includes a Wasm port. A big use case these days for vector databases is efficient retrieval of text snippets to include as context while constructing LLM prompts.

NYC AI×UX event

We felt FOMO on latent.space’s AI|UX event, so we’re bringing it to NYC on May 17th. We're still hammering out some details (like final capacity) with the venue, but stay tuned for general registration to open up next week.

In the meantime, we're accepting demo pitches! If you know anyone who might be interested, here's a teaser tweet you can send them.

I always appreciate when people flag things of interest to the newsletter -- replies to this email go straight to my inbox. Thanks to Andy of Magrathea, Niranjan of Cedana, and Davis at Innovation Endeavors for flagging things this week.

Until next time,

Paul

You just read issue #19 of Browsertech Digest. You can also browse the full archives of this newsletter.