Hey folks, welcome to the digest.

Gaussian Splatting

Gaussian Splatting is a technique for representing real-world 3D scenes as a point cloud in which each point is a small, translucent 3D Gaussian rather than a solid dot.

The result is similar to a point cloud generated from a LiDAR scan, but with fewer artifacts. This is remarkable given that unlike a LiDAR scan, it doesn’t require depth information, and unlike NeRFs, it doesn’t use a deep neural network.
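For reference, in the original 3D Gaussian Splatting formulation (Kerbl et al., 2023), each point is an anisotropic 3D Gaussian with center $\boldsymbol{\mu}$ and covariance $\Sigma$. The covariance is built from a per-point rotation $R$ and scale $S$ so that it stays a valid covariance matrix during optimization:

$$
G(\mathbf{x}) = \exp\!\left(-\tfrac{1}{2}\,(\mathbf{x}-\boldsymbol{\mu})^\top \Sigma^{-1} (\mathbf{x}-\boldsymbol{\mu})\right),
\qquad
\Sigma = R\,S\,S^\top R^\top
$$

Each Gaussian also stores an opacity and a view-dependent color. Its contribution to a pixel is that opacity scaled by the falloff of its projected (2D, screen-space) Gaussian, which is why splats blend softly instead of covering pixels outright.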

Two browser-based gaussian splat viewers have popped up recently, one doing all the rendering client-side and another using pixel streaming.

Browser Rendering

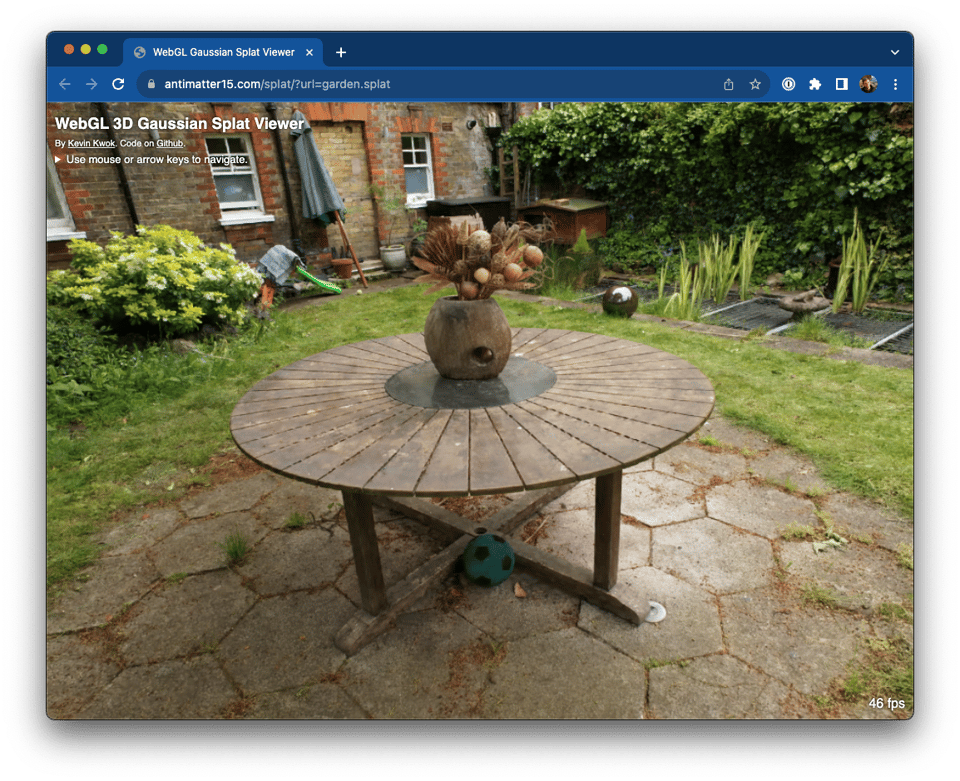

Here’s a WebGL gaussian splat viewer from Kevin Kwok. Its README contains a great discussion of the rendering technique.

Rendering gaussian splats is trickier than rendering a typical point cloud.

In a typical point cloud, the points are opaque. When rendering opaque things, GPUs use a trick (the depth buffer) where they keep track of both the color and the distance (depth) of each pixel. To draw each point, they calculate the pixel it affects and skip the draw if a nearer point has already been written to that pixel.

This gives a correct result regardless of the order of the points.
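Here is a minimal software sketch of that depth-buffer idea. It is illustrative only (real GPUs do this in hardware, per fragment), and the `Fragment` shape and `renderOpaque` name are made up for this example. Drawing the same two points in either order produces the same pixel, because the depth test always keeps the nearer one.

```typescript
// One opaque point after projection: which pixel it lands on, how far away it is, and its color.
interface Fragment {
  pixel: number; // index into the framebuffer
  depth: number; // distance from the camera; smaller is nearer
  color: number; // packed RGB, e.g. 0xff0000 for red
}

// Software depth buffer: the result does not depend on the order of `fragments`.
function renderOpaque(fragments: Fragment[], pixelCount: number): Uint32Array {
  const color = new Uint32Array(pixelCount); // cleared to black (0)
  const depth = new Float32Array(pixelCount).fill(Infinity); // nothing drawn yet
  for (const f of fragments) {
    // Depth test: only overwrite the pixel if this fragment is nearer than what is stored.
    if (f.depth < depth[f.pixel]) {
      depth[f.pixel] = f.depth;
      color[f.pixel] = f.color;
    }
  }
  return color;
}
```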

With gaussian splats, this doesn’t work, because the points are translucent. To render them correctly, they need to be processed back-to-front. Since the order depends on the camera position, this means the points need to be re-ordered whenever the camera moves.
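To see why order matters for translucent points, here is a sketch of standard "over" alpha compositing for a single grayscale pixel. The `Splat` type and function names are hypothetical, and a real splat renderer would also scale each splat's opacity by its projected Gaussian footprint, which this sketch leaves out.

```typescript
// One translucent splat as it lands on a single pixel (grayscale for brevity).
interface Splat {
  depth: number; // distance from the camera; larger is farther
  color: number; // intensity in [0, 1]
  alpha: number; // opacity in [0, 1]
}

// "Over" compositing: each splat is blended on top of whatever is already in the pixel.
function compositeInOrder(splats: Splat[], background = 0): number {
  let out = background;
  for (const s of splats) {
    out = s.alpha * s.color + (1 - s.alpha) * out;
  }
  return out;
}

// Correct result for translucent splats: sort farthest-first, then composite.
function compositeBackToFront(splats: Splat[], background = 0): number {
  const sorted = [...splats].sort((a, b) => b.depth - a.depth);
  return compositeInOrder(sorted, background);
}
```

With a half-transparent white splat in front of a half-transparent black one, back-to-front compositing gives 0.5, while drawing the near splat first gives 0.25. Unlike the depth test, the answer depends on draw order.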

Kevin points out that most Gaussian Splatting renderer implementations do the sort directly on the GPU with a bitonic sort.
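Bitonic sort suits the GPU because it is a fixed network of compare-and-swap steps: the sequence of comparisons is decided in advance regardless of the data, and every element in a step can be handled by its own thread. The sketch below emulates that network on the CPU with plain loops. It is not taken from any particular renderer, and `bitonicSortByKey` is a hypothetical name. It sorts depth keys ascending and carries splat indices along, so passing negated depths yields a farthest-first order.

```typescript
// CPU emulation of a bitonic sorting network. On a GPU, each iteration of the
// innermost loop (over i) would run in parallel as its own thread.
// Requires a power-of-two length; pad with +Infinity keys if needed.
function bitonicSortByKey(keys: Float32Array, indices: Uint32Array): void {
  const n = keys.length;
  if ((n & (n - 1)) !== 0) throw new Error("length must be a power of two");
  if (indices.length !== n) throw new Error("keys and indices must have the same length");
  for (let k = 2; k <= n; k <<= 1) {
    // k: size of the bitonic sequences being merged in this pass
    for (let j = k >> 1; j > 0; j >>= 1) {
      // j: distance between the two elements being compared
      for (let i = 0; i < n; i++) {
        const partner = i ^ j;
        if (partner <= i) continue; // each pair is handled once, by its lower index
        const ascending = (i & k) === 0;
        const outOfOrder = ascending ? keys[i] > keys[partner] : keys[i] < keys[partner];
        if (outOfOrder) {
          // Swap the keys and keep the splat indices in sync with them.
          const tmpKey = keys[i];
          keys[i] = keys[partner];
          keys[partner] = tmpKey;
          const tmpIdx = indices[i];
          indices[i] = indices[partner];
          indices[partner] = tmpIdx;
        }
      }
    }
  }
}
```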

To support browsers that do not yet have compute shaders (via WebGPU), Kevin instead sorts the points on the CPU. Since this is slow (~4 fps), sorting happens outside the main render loop, in a background thread via a Web Worker.
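The CPU-side work amounts to computing each splat's distance from the camera and sorting by it. A minimal sketch, assuming splat centers are packed as `xyz` triples in a `Float32Array` and the view matrix is a column-major 4x4 array (the usual WebGL layout, with the camera looking down -z). The function name and the worker message shape in the trailing comment are hypothetical, and the sort is a plain comparison sort chosen for clarity rather than speed.

```typescript
// Back-to-front draw order for splat centers, computed on the CPU.
// positions: packed [x0, y0, z0, x1, y1, z1, ...]
// view: column-major 4x4 world-to-camera matrix (camera looks down -z).
function backToFrontOrder(positions: Float32Array, view: Float32Array): Uint32Array {
  const count = Math.floor(positions.length / 3);
  const depth = new Float32Array(count);
  for (let i = 0; i < count; i++) {
    const x = positions[3 * i];
    const y = positions[3 * i + 1];
    const z = positions[3 * i + 2];
    // Row 2 of a column-major matrix lives at indices 2, 6, 10, 14 and gives camera-space z.
    // Negate it so that a larger value means farther from the camera.
    depth[i] = -(view[2] * x + view[6] * y + view[10] * z + view[14]);
  }
  const order = new Uint32Array(count);
  for (let i = 0; i < count; i++) order[i] = i;
  order.sort((a, b) => depth[b] - depth[a]); // farthest first
  return order;
}

// Hypothetical worker wiring (message shape is an assumption):
// self.onmessage = (e) => {
//   const order = backToFrontOrder(e.data.positions, e.data.view);
//   self.postMessage({ order }, [order.buffer]); // transfer the buffer instead of copying it
// };
```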

To keep rendering at a smooth 60 fps, the GPU side doesn’t wait for the new point order and instead uses the last available one. This means that most frames are actually rendered with a stale point order. Kevin takes advantage of the fact that, unless the camera has moved a lot, this stale order still produces an image reasonably close to the “correct” one.
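The "use the last available order" policy can be expressed as a small scheduler. This is a hypothetical sketch, not Kevin's code: `StartSort` stands in for posting a message to the worker and receiving its reply, and the choice to remember only the newest camera position while a sort is in flight is an assumption made here.

```typescript
// Stand-in for the background sorter: begin sorting for `view`, call `done` when finished.
// In a browser this would wrap worker.postMessage and worker.onmessage.
type StartSort = (view: Float32Array, done: (order: Uint32Array) => void) => void;

class StaleOrderScheduler {
  private order: Uint32Array;
  private sortInFlight = false;
  private latestView: Float32Array | null = null; // newest camera not yet sorted for
  private readonly startSort: StartSort;

  constructor(initialOrder: Uint32Array, startSort: StartSort) {
    this.order = initialOrder;
    this.startSort = startSort;
  }

  // Called whenever the camera moves. Never blocks the render loop.
  cameraMoved(view: Float32Array): void {
    this.latestView = view;
    this.maybeStartSort();
  }

  // Called every frame: returns whatever order is available, possibly stale.
  currentOrder(): Uint32Array {
    return this.order;
  }

  private maybeStartSort(): void {
    if (this.sortInFlight || this.latestView === null) return;
    const view = this.latestView;
    this.latestView = null;
    this.sortInFlight = true;
    this.startSort(view, (order) => {
      this.order = order;
      this.sortInFlight = false;
      this.maybeStartSort(); // the camera may have moved again while we were sorting
    });
  }
}
```

Because `currentOrder()` never waits, the frame rate is decoupled from the sort rate; the cost is that frames drawn during a fast camera move use an order computed for an older viewpoint.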

You can see artifacts of this approach if you swing the camera around wildly, but even then the image self-corrects within a few hundred milliseconds, once the CPU finishes re-sorting the points.

Pixel Streaming

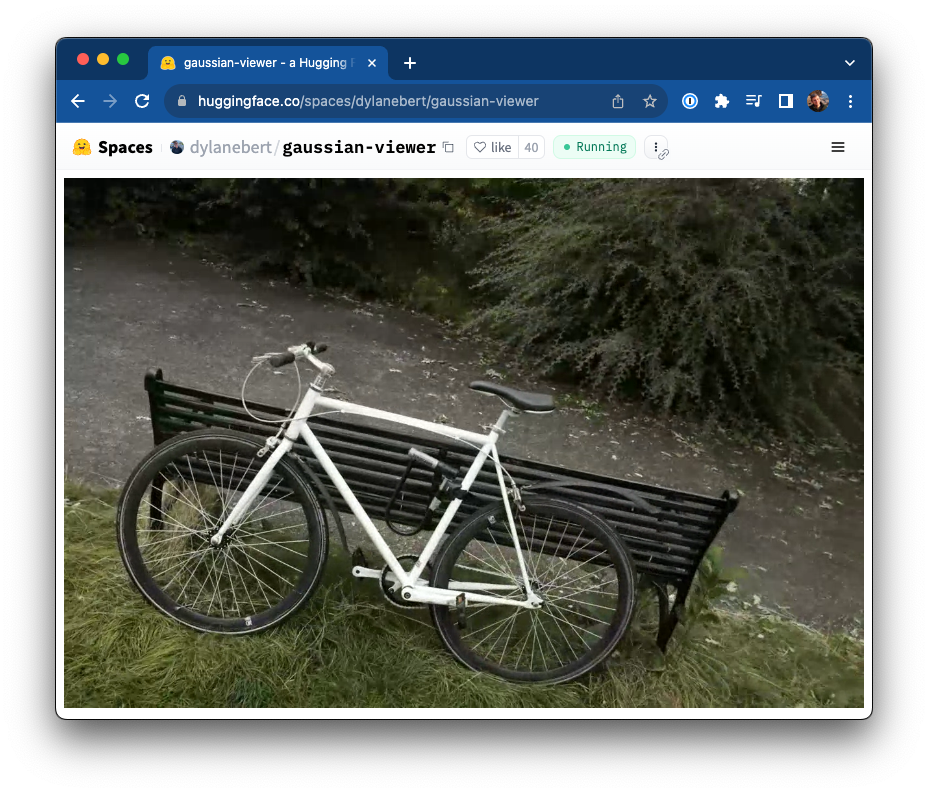

Dylan Ebert of Hugging Face has built another browser-based viewer (repo) that uses pixel streaming over WebRTC instead of running entirely in the browser.

Dylan wrote a short Twitter thread about the technique, which takes advantage of on-GPU video encoding. This matches our own experiments with remote rendering at Drifting in Space, where we found that CPU-side encoding was often a bigger source of latency than rendering or the network.

Dylan’s explanation of gaussian splatting is also a great two-minute introduction to the technique.

Porting Descript to the browser

Here’s a SyntaxFM episode with Andrew Lisowski about migrating Descript’s Electron app into the browser.

Some notes I took:

- “When we moved to web, a lot of things were faster. Currently opening a project in the Electron app takes a bit longer than it does on web, because on web we just stream things.”

- On local-first: “Ideally you want a local-first Descript, but do you really want hundreds of gigabytes of videos in your browser’s local storage?”

- Heavy use of WebCodecs, which means it only supports Chromium-based browsers for now, with Safari support expected this year.

- Uses libav.js, which compiles FFmpeg to WebAssembly with Emscripten.

- “We use IndexedDB quite a lot”

- “Going from an Electron app to a web app, there’s a lot of things you can’t do any more. […] You have a little bit more access to the system. If you’re moving to the web, you have to start moving more of those things into the backend.” He gave AI features as an example of something that needs to run in the cloud.
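Since WebCodecs availability varies by browser, an app built on it needs to check for it before choosing a code path. Here is a minimal, hypothetical sketch using feature detection (WebCodecs exposes `VideoDecoder` and `VideoEncoder` as globals where it is supported). The notes above don't say how Descript handles unsupported browsers; the `wasm-fallback` path, for example a WebAssembly decoder such as libav.js, is an assumption for illustration.

```typescript
// Which video pipeline the app can use in the current environment (hypothetical names).
type DecodePath = "webcodecs" | "wasm-fallback";

// Feature-detect WebCodecs instead of sniffing the user agent.
// `env` defaults to the real global object; tests can pass a stub.
function chooseDecodePath(env: object = globalThis): DecodePath {
  return "VideoDecoder" in env && "VideoEncoder" in env ? "webcodecs" : "wasm-fallback";
}
```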

It’s a good episode, give it a listen.

Other things

- Here’s a great rundown by Kyle Mathews of some of the work going on right now in local-first software.

- I was on the Open Source Startup Podcast talking about Drifting in Space and browser-based apps.

- I’ve been hanging out in the #browsertech channel on our Discord, come say hi!

- Our CRDT-based multiplayer document backend, Y-Sweet Cloud, is now open for registration.

You just read issue #27 of Browsertech Digest. You can also browse the full archives of this newsletter.